Upload From Your Local Device

BlendVision lets you upload video and audio files directly from your local device for encoding jobs.

For the accepted formats, see the supported formats. The maximum supported file size is 70GB.

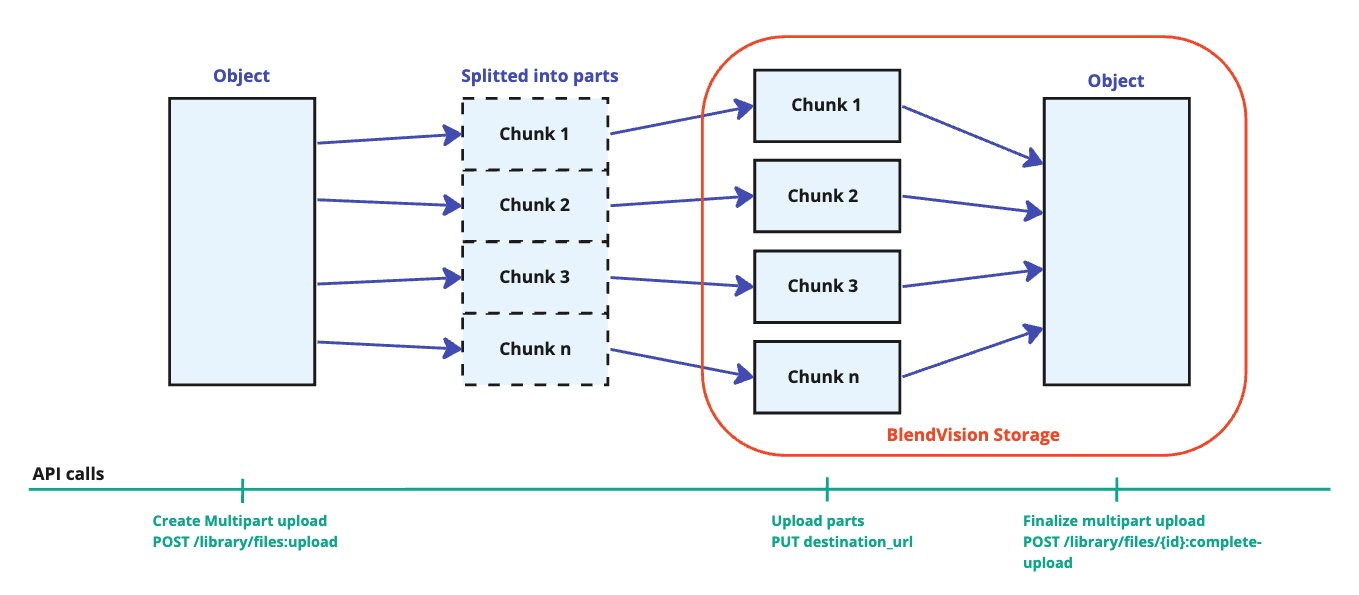

To keep uploads efficient and reliable, split your file into the parts specified by the API. Then upload each part with a PUT request to its `presigned_url`.

Each part is a contiguous portion of the file's data. You can upload the parts independently and in any order.
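Uploading one part is a plain HTTP `PUT` of that chunk's bytes to its `presigned_url`. Below is a minimal sketch using the Fetch API rather than an official client. The helper name `putPart` and its injectable `fetchImpl` parameter are hypothetical. The helper returns the part's `ETag` response header because finalizing the upload needs it. In browsers, that header is readable only if the storage endpoint's CORS policy exposes it, which is assumed here.

```typescript
// Hypothetical helper: upload one chunk to its presigned URL and return the part's ETag.
// `fetchImpl` defaults to the global fetch (browsers, Node 18+) and can be replaced in tests.
async function putPart(
  presignedUrl: string,
  chunk: Blob,
  partNumber: number,
  fetchImpl: typeof fetch = fetch,
): Promise<{ etag: string; part_number: number }> {
  const response = await fetchImpl(presignedUrl, { method: 'PUT', body: chunk });
  if (!response.ok) {
    throw new Error(`Part ${partNumber} failed with HTTP ${response.status}`);
  }
  // Assumption: the storage exposes ETag to browsers via Access-Control-Expose-Headers.
  const etag = response.headers.get('ETag');
  if (!etag) {
    throw new Error(`Part ${partNumber}: ETag header missing or not exposed`);
  }
  return { etag, part_number: partNumber };
}
```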

Uploading a large file takes four steps:

- Initiate a multipart upload for your file

- Logically split your file into byte-range chunks

- Upload the chunks in parallel

- Assemble the chunks into the complete file
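Splitting is purely logical. Each chunk is defined by a start and an end byte offset, and no data is copied until a chunk is read. Here is a minimal sketch, assuming every chunk except the last has the same size and the last one takes the remainder. The helper `splitIntoRanges` is hypothetical, not part of the BlendVision API.

```typescript
// Hypothetical helper: compute contiguous byte ranges [start, end) for each part.
// Every range except the last has the same size; the last one takes the remainder.
type ByteRange = { index: number; start: number; end: number };

function splitIntoRanges(fileSize: number, partCount: number): ByteRange[] {
  if (!Number.isInteger(partCount) || partCount < 1) {
    throw new Error('partCount must be a positive integer');
  }
  // Round up so that partCount ranges always cover the whole file.
  const partSize = Math.ceil(fileSize / partCount);
  const ranges: ByteRange[] = [];
  for (let i = 0; i < partCount; i++) {
    const start = Math.min(i * partSize, fileSize);
    const end = Math.min(start + partSize, fileSize);
    ranges.push({ index: i, start, end });
  }
  return ranges;
}
```

In a browser, `file.slice(range.start, range.end)` returns a `Blob` for one range without reading the file into memory. Because the ranges never overlap, they can be uploaded in any order.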

Here are the steps to complete this process:

Create Multipart Upload

An upload URL is a presigned location, generated on demand, to which you upload your file.

To obtain upload URLs, send a POST request to the following API. Put your file's name and size, along with its type and source, in the request body:

POST /bv/cms/v1/library/files:upload

Here are example request bodies for a video file (VOD) and an audio file (AOD):

VOD:

```json
{
  "file": {
    "type": "FILE_TYPE_VIDEO",
    "source": "FILE_SOURCE_ADD_VOD",
    "name": "file_name",
    "size": "file_size_in_bytes"
  }
}
```

AOD:

```json
{
  "file": {
    "type": "FILE_TYPE_AUDIO",
    "source": "FILE_SOURCE_ADD_AOD",
    "name": "file_name",
    "size": "file_size_in_bytes"
  }
}
```
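The two bodies differ only in `type` and `source`. The small sketch below builds either one from a local file's name and size. The helper `buildUploadRequest` and its `'VOD' | 'AOD'` switch are hypothetical; the field values are the ones shown in the examples. As in the examples, `size` is sent as a string containing the byte count.

```typescript
// Hypothetical helper that builds the body for POST /bv/cms/v1/library/files:upload.
type UploadKind = 'VOD' | 'AOD';

interface UploadRequestBody {
  file: {
    type: 'FILE_TYPE_VIDEO' | 'FILE_TYPE_AUDIO';
    source: 'FILE_SOURCE_ADD_VOD' | 'FILE_SOURCE_ADD_AOD';
    name: string;
    size: string; // Byte count serialized as a string, as in the examples
  };
}

function buildUploadRequest(kind: UploadKind, name: string, sizeInBytes: number): UploadRequestBody {
  if (!Number.isSafeInteger(sizeInBytes) || sizeInBytes <= 0) {
    throw new Error('sizeInBytes must be a positive integer');
  }
  const isVideo = kind === 'VOD';
  return {
    file: {
      type: isVideo ? 'FILE_TYPE_VIDEO' : 'FILE_TYPE_AUDIO',
      source: isVideo ? 'FILE_SOURCE_ADD_VOD' : 'FILE_SOURCE_ADD_AOD',
      name,
      size: String(sizeInBytes),
    },
  };
}
```

For example, `JSON.stringify(buildUploadRequest('AOD', 'podcast.mp3', 52428800))` produces a body shaped like the AOD example.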

If the file exceeds 100MB, the response provides multiple URLs. Split the file into that many pieces and upload each piece to its own URL. Each piece must be between 5 MiB and 5 GiB, except the last piece, which has no size limit.
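You can check these limits before uploading. The sketch below assumes the file is cut into equal-sized parts, with the last part taking the remainder. MiB and GiB are binary units (1 MiB = 1,048,576 bytes). The function `checkPartSizes` and its return shape are hypothetical.

```typescript
const MiB = 1024 * 1024;
const GiB = 1024 * MiB;
const MIN_PART_SIZE = 5 * MiB; // Applies to every part except the last
const MAX_PART_SIZE = 5 * GiB; // Applies to every part except the last

// Hypothetical check: do equal-sized parts (last part takes the remainder) respect the limits?
function checkPartSizes(
  fileSize: number,
  partCount: number,
): { partSize: number; lastPartSize: number; ok: boolean } {
  if (!Number.isInteger(partCount) || partCount < 1) {
    throw new Error('partCount must be a positive integer');
  }
  if (partCount === 1) {
    // A single URL receives the whole file, so that piece is also the last one.
    return { partSize: fileSize, lastPartSize: fileSize, ok: fileSize > 0 };
  }
  const partSize = Math.ceil(fileSize / partCount);
  const lastPartSize = fileSize - partSize * (partCount - 1);
  const ok = partSize >= MIN_PART_SIZE && partSize <= MAX_PART_SIZE && lastPartSize > 0;
  return { partSize, lastPartSize, ok };
}
```

For example, a 200 MiB file split across two URLs gives two 100 MiB parts, which passes. An 8 MiB file split across two URLs gives 4 MiB parts, which fails the 5 MiB minimum.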

Here's an example of the response:

```json
{
  "file": {
    "id": "library-id",
    "upload_data": {
      "id": "upload-id",
      "parts": [
        {
          "part_number": 0,
          "presigned_url": "string"
        },
        {
          "part_number": 1,
          "presigned_url": "string"
        }
      ]
    }
  }
}
```

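In the response:

- The `id` within the `file` object is the library ID of the file.

- The `id` within the `upload_data` object is the upload ID of the file.

- Each entry in `parts` pairs a `part_number` with the `presigned_url` that the part is uploaded to.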
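The following sketch pulls both IDs and the part list out of the response. The example above nests `upload_data` inside `file`, while the sample code in the next section reads it from the top level of the response. This hypothetical `readUploadIds` helper therefore accepts either shape. Treat that tolerance as an assumption rather than documented behavior.

```typescript
interface UploadPart {
  part_number: number;
  presigned_url: string;
}

interface UploadData {
  id: string;
  parts: UploadPart[];
}

// Loose response shape covering both placements of upload_data.
interface UploadResponseLike {
  file: { id: string; upload_data?: UploadData };
  upload_data?: UploadData;
}

// Hypothetical helper: extract the library ID, the upload ID, and the parts to upload.
function readUploadIds(res: UploadResponseLike): { libraryId: string; uploadId: string; parts: UploadPart[] } {
  const uploadData = res.file.upload_data ?? res.upload_data;
  if (!uploadData) {
    throw new Error('Response contains no upload_data');
  }
  return { libraryId: res.file.id, uploadId: uploadData.id, parts: uploadData.parts };
}
```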

Split and Upload Parts

We recommend uploading the parts in parallel while capping the number of concurrent uploads, which keeps uploads efficient and smooth. The following TypeScript example illustrates the process:

```typescript
// Imports the HTTP client used for the BlendVision API and the presigned-URL uploads.
import axios from 'axios';

// Simplified file type with only the fields this sample uses.
export type CustomFile = {
  size: number;
  name: string;
} & File;

// File types and sources, matching the request body examples.
export enum FileType {
  Video = 'FILE_TYPE_VIDEO',
  Audio = 'FILE_TYPE_AUDIO',
}

export enum FileSourceType {
  Vod = 'FILE_SOURCE_ADD_VOD',
  Aod = 'FILE_SOURCE_ADD_AOD',
}

// Response containing file details.
export interface FileResponse {
  id: string;
  uri: string;
}

// Response received upon completing the file upload.
export interface CompleteUploadFileResponse {
  file: FileResponse;
}

// Parameters accepted by the uploader.
export interface UploaderParams {
  file: CustomFile;
  type: FileType;
  source: FileSourceType;
  concurrency?: number; // Maximum number of parts uploaded at once
  onError: (error: unknown) => void;
  onComplete: (result: CompleteUploadFileResponse) => void;
  onProgress?: (progress: number) => void; // Overall progress as a percentage
}

// An uploaded part: its number and the ETag returned by the presigned PUT.
export interface Part {
  etag: string;
  part_number: number;
}

// Request body used to initiate an upload.
export interface UploadFileData {
  file: {
    name: string;
    size: string;
    type: FileType;
    source: FileSourceType;
    attrs?: Record<string, unknown>;
  };
}

// A part to upload and its presigned URL.
export interface UploadPart {
  part_number: number;
  presigned_url: string;
}

// Response received upon initiating the upload. This sample reads upload_data next to file;
// the example response nests it inside file, so check which shape your API returns.
export interface UploadFileResponse {
  file: UploadFileData['file'] & { id: string; uri: string };
  upload_data: {
    id: string;
    parts: UploadPart[];
  };
}

// Axios instance for BlendVision API requests; add the authentication headers your account requires.
const AxiosInstance = axios.create({
  baseURL: 'https://api.one.blendvision.com', // Replace with your API base URL
});

// API call to cancel an upload.
const cancelUploadFile = (id: string, { upload_id }: { upload_id: string }) =>
  AxiosInstance.post(`/bv/cms/v1/library/files/${id}:cancel-upload`, { upload_id });

// API call to complete an upload; resolves to the response body.
const completeUploadFile = (data: { id: string; complete_data: { id: string; parts: Part[] } }) =>
  AxiosInstance.post<CompleteUploadFileResponse>(`/bv/cms/v1/library/files/${data.id}:complete-upload`, {
    complete_data: data.complete_data,
  }).then(res => res.data);

// API call to initiate an upload; resolves to the response body.
const uploadFile = (data: UploadFileData) =>
  AxiosInstance.post<UploadFileResponse>('/bv/cms/v1/library/files:upload', data).then(res => res.data);

// Handles multipart file uploads.
class MultiPartUploader {
  file: CustomFile;
  type: FileType;
  source: FileSourceType;
  concurrency: number;
  onComplete: (result: CompleteUploadFileResponse) => void;
  onError: (error: unknown) => void;
  onProgress?: (progress: number) => void;

  parts: Part[] = []; // Parts uploaded so far
  fileId = ''; // Library ID of the file being uploaded
  uploadId = ''; // ID of the upload session
  partCount = 0; // Number of parts returned by the API
  uploadedBytes = new Map<number, number>(); // Bytes sent so far, per part number
  abortController = new AbortController(); // Cancels in-flight part uploads

  // The default concurrency of 4 is an arbitrary example value.
  constructor({ file, type, source, concurrency = 4, onProgress, onError, onComplete }: UploaderParams) {
    this.file = file;
    this.type = type;
    this.source = source;
    this.concurrency = Math.max(1, concurrency);
    this.onProgress = onProgress;
    this.onError = onError;
    this.onComplete = onComplete;
  }

  // Main method: initiate the upload, upload all parts, then complete it.
  async upload() {
    try {
      const { name, size } = this.file;

      // Initiate the upload to get the library ID, upload ID, and presigned part URLs.
      const {
        file: { id: fileId },
        upload_data: { id: uploadId, parts },
      } = await uploadFile({
        file: { name, size: size.toString(), source: this.source, type: this.type },
      });

      this.fileId = fileId;
      this.uploadId = uploadId;
      this.partCount = parts.length;
      if (this.partCount === 0) {
        throw new Error('The upload API returned no parts');
      }

      // Every part except the last has the same size; rounding up makes the parts cover the whole file.
      // Assumes parts[i] corresponds to the i-th byte range of the file.
      const chunkSize = Math.ceil(size / this.partCount);

      // Upload the parts in parallel, but never more than `concurrency` at once.
      let next = 0; // Index of the next part to start
      let failed = false; // Stops workers from starting new parts after a failure
      const worker = async () => {
        while (!failed && next < parts.length) {
          const i = next++;
          const { part_number, presigned_url } = parts[i];
          const chunk = this.file.slice(chunkSize * i, chunkSize * (i + 1));
          try {
            await this.uploadPart({ presignedUrl: presigned_url, partNumber: part_number, chunk });
          } catch (error) {
            failed = true;
            throw error;
          }
        }
      };
      const workerCount = Math.min(this.concurrency, parts.length);
      await Promise.all(Array.from({ length: workerCount }, () => worker()));

      // All parts are uploaded; register them with the API.
      await this.completeUpload();
    } catch (error) {
      // Cancellations triggered by abort() are not reported as errors.
      if (!axios.isCancel(error)) {
        this.onError(error);
      }
    }
  }

  // Uploads one part to its presigned URL and records its ETag. Errors propagate to upload().
  async uploadPart({ presignedUrl, chunk, partNumber }: { presignedUrl: string; chunk: Blob; partNumber: number }) {
    // Use the plain axios client so headers added to AxiosInstance (such as API credentials)
    // are not sent to the presigned URL.
    const response = await axios.put(presignedUrl, chunk, {
      headers: { 'Content-Type': 'application/octet-stream' },
      signal: this.abortController.signal,
      onUploadProgress: progressEvent => {
        // Track bytes per part so parallel uploads do not overwrite each other's progress.
        this.uploadedBytes.set(partNumber, Math.min(progressEvent.loaded, chunk.size));
        this.reportProgress();
      },
    });

    // In browsers the ETag header is readable only if the storage CORS policy exposes it.
    const etag = response.headers['etag'];
    if (typeof etag !== 'string' || !etag) {
      throw new Error(`Missing ETag for part ${partNumber}`);
    }
    this.uploadedBytes.set(partNumber, chunk.size);
    this.reportProgress();
    this.parts = [...this.parts, { etag, part_number: partNumber }];
  }

  // Reports overall progress as a percentage with two decimals.
  reportProgress() {
    if (!this.onProgress || this.file.size === 0) return;
    let total = 0;
    this.uploadedBytes.forEach(bytes => {
      total += bytes;
    });
    this.onProgress(Math.floor((total / this.file.size) * 10000) / 100);
  }

  // Finalizes the upload by sending the collected parts to the complete-upload API.
  async completeUpload() {
    if (!this.fileId) throw new Error('No file id');
    if (!this.uploadId) throw new Error('No upload id');
    if (this.parts.length !== this.partCount) {
      throw new Error(`Expected ${this.partCount} parts, got ${this.parts.length}`);
    }

    // Parts finish in arbitrary order; sort them by part number before completing.
    const parts = [...this.parts].sort((a, b) => a.part_number - b.part_number);
    const data = await completeUploadFile({
      id: this.fileId,
      complete_data: { id: this.uploadId, parts },
    });
    this.onComplete(data);
  }

  // Aborts in-flight part uploads and asks the API to cancel the upload session.
  async abort() {
    this.abortController.abort();
    if (!this.fileId || !this.uploadId) return; // The upload has not been initiated yet
    try {
      await cancelUploadFile(this.fileId, { upload_id: this.uploadId });
    } catch (error) {
      this.onError(error);
    }
  }
}

// Export the MultiPartUploader class for external use.
export default MultiPartUploader;
```

Key functionalities in the sample code:

- Chunked File Upload: The file is divided into parts that are uploaded separately. Because each part is independent, a failed part can be retried without re-sending the whole file, which makes this approach well suited to large files.

- Error Handling and Progress Reporting: `onProgress` reports the overall upload progress as a percentage. `onError` receives errors raised while initiating, uploading, or completing the upload, so the application can keep the user informed.

- Abort Capability: `abort()` calls the cancel-upload API, letting users stop a large upload they no longer need.
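Because each part is uploaded independently, a failed part can be retried on its own instead of restarting the whole file. The following is a minimal sketch of a retry wrapper with exponential backoff. The name `withRetry`, the default of three attempts, and the delay values are hypothetical choices. The sketch retries on any error, whereas a production version would typically retry only transient failures such as network errors or HTTP 5xx responses.

```typescript
// Hypothetical helper: retry an async operation with exponential backoff.
// After failed attempt n (1-based), it waits baseDelayMs * 2^(n-1) before trying again.
async function withRetry<T>(
  operation: () => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 500,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation();
    } catch (error) {
      lastError = error;
      if (attempt < maxAttempts) {
        const delayMs = baseDelayMs * 2 ** (attempt - 1);
        await new Promise<void>(resolve => setTimeout(resolve, delayMs));
      }
    }
  }
  // Every attempt failed; surface the last error to the caller.
  throw lastError;
}
```

For example, `withRetry(() => uploadOnePart(part))` re-sends only that part's bytes on failure. Here `uploadOnePart` stands for whatever function uploads a single part.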

Finalize Multipart Upload

After uploading all parts to the URLs obtained in the first step, register the file by calling the following API:

POST /bv/cms/v1/library/files/{id}:complete-upload

Replace the path parameter `{id}` with the library ID of the file being uploaded.

Here's an example of the request body:

```json
{
  "complete_data": {
    "id": "upload-id",
    "parts": [
      {
        "etag": "uploaded-part-ETag",
        "part_number": 1
      }
    ]
  }
}
```

The `parts` array lists every uploaded part with its ETag (taken from the `ETag` header of that part's PUT response) and its part number.
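The sketch below assembles this body from the parts collected during the upload. It rejects missing ETags and duplicate or missing parts, and it sorts the parts by `part_number`. The sorting is a precaution borrowed from S3-style multipart APIs, which require ascending part numbers; whether BlendVision requires it is an assumption. The helper `buildCompleteBody` and its `expectedCount` parameter are hypothetical.

```typescript
interface CompletedPart {
  etag: string;
  part_number: number;
}

interface CompleteUploadBody {
  complete_data: { id: string; parts: CompletedPart[] };
}

// Hypothetical helper: build the body for POST /bv/cms/v1/library/files/{id}:complete-upload.
function buildCompleteBody(uploadId: string, parts: CompletedPart[], expectedCount: number): CompleteUploadBody {
  if (parts.length !== expectedCount) {
    throw new Error(`Expected ${expectedCount} parts, got ${parts.length}`);
  }
  const seen = new Set<number>();
  for (const part of parts) {
    if (!part.etag) throw new Error(`Missing ETag for part ${part.part_number}`);
    if (seen.has(part.part_number)) throw new Error(`Duplicate part ${part.part_number}`);
    seen.add(part.part_number);
  }
  // Copy before sorting so the caller's array is left untouched.
  const sorted = [...parts].sort((a, b) => a.part_number - b.part_number);
  return { complete_data: { id: uploadId, parts: sorted } };
}
```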